Experts Warn Social Media Is Pushing Distressing News to Children

Survey reveals TikTok and Instagram feeds are exposing young users to violent, graphic and traumatic news content.

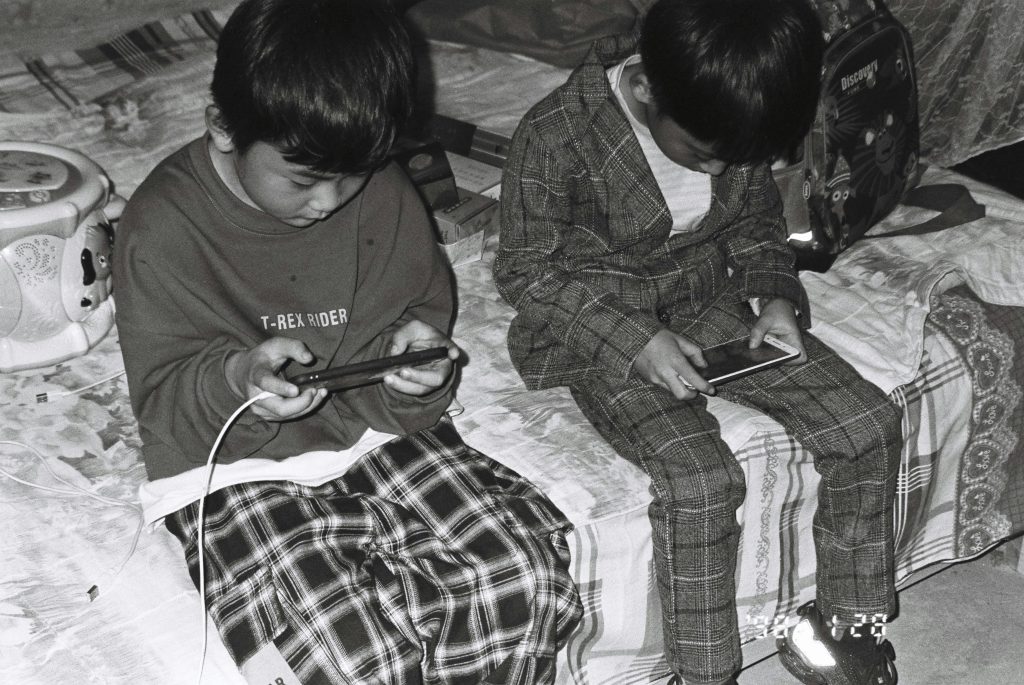

A new report by online safety organization Internet Matters has found that more than half of children who get their news from social media are being left worried and upset after viewing distressing videos involving war, shootings, stabbings and car crashes.

The findings suggest that algorithms on platforms such as TikTok and Instagram are “pushing” graphic news content to children who are not intentionally seeking it, raising fresh concerns about the psychological impact of online media exposure.

Recent disturbing videos, including footage of the murder of Charlie Kirk, the Liverpool parade car-ramming attack, and scenes from wars and violent crimes, have appeared in the feeds of children as young as 11.

According to Internet Matters, 39% of children who viewed such content said they felt “very or extremely upset.” One 14-year-old girl told researchers:

“On TikTok, you can see stabbings and kidnappings, which are just not nice to see. It makes you feel uncomfortable.”

A 17-year-old recalled:

“On Instagram, there are often stabbing videos before they’re taken down. When Liam Payne died, there was video footage online. I thought it wasn’t very nice; there should’ve been a trigger warning.”

The report revealed that two-thirds of children get their news through social media, yet 40% don’t follow any news accounts. Instead, they encounter content through automated recommendations.

More than 61% of young people said they had seen a worrying or upsetting story in the past month. Experts say this shows how platform designs that prioritize engagement can lead to harmful, unsolicited exposure to disturbing material.

The study highlights a shift in how social media operates. Users now spend less time viewing friends’ posts and more time watching algorithmically recommended content.

Meta, the parent company of Instagram, admitted in April that only 8% of user time is now spent on posts from friends, a drop of more than one-third since 2023. This change has been linked to the rise of the so-called “brain rot” phenomenon, in which users feel mentally drained by endless, emotionally charged content.

Alarmingly, 86% of children surveyed said they do not know how to reset or control the algorithm that determines what they see, leaving them vulnerable to upsetting material.

Rachel Huggins, co-chief executive of Internet Matters, warned that the shift away from traditional news sources is “radically changing how children consume information.”

“The algorithmic design of social media platforms is enabling children to see negative and upsetting content and delivering it to millions who aren’t even looking for it,” she said.

The organization, supported in part by tech companies including TikTok, is urging parents to talk openly with children about what they see online and to make use of content filters and parental controls.

Chi Onwurah, the Labour MP who chairs the Commons science and technology committee, said the findings show that the UK’s Online Safety Act “isn’t up to scratch.”

“We need a stronger regime that discourages viral misinformation, regulates generative AI, and enforces clear standards on social media companies,” Onwurah stated.

The Act requires tech firms to give children age-appropriate access to content and to block material depicting or promoting serious violence. However, Internet Matters says that even 11- to 12-year-olds are viewing war and crime content, in breach of the minimum age of 13 that most platforms set in their own policies.

TikTok said that gory and disturbing material, including depictions of blood or extreme fighting, is banned from its “For You” feed. The company says it uses independent fact-checkers and provides parental controls that allow guardians to block usage at certain times and filter specific content.

Despite these measures, experts argue that the volume and speed of content uploaded daily make enforcement difficult, leaving children exposed before harmful videos are detected or removed.